Norton Login

Protecting your devices and managing your Norton product is as easy as adding a new device to your Norton account. Follow these instructions to install or reinstall your Norton product that is registered to your account.

If you received your Norton product from your service provider, read Install Norton from your service provider.

Sign in to Norton.

If you're not already signed in to Norton, you'll be prompted to sign in. Enter your Norton email address and password and click Sign in.

If you don't already have an account, click Create an account, then complete the sign-up process.

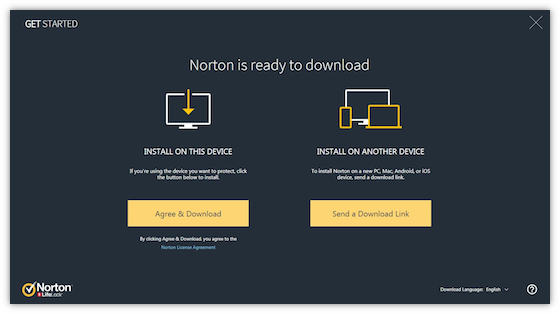

On the Get Started page, click Download Norton.

If you have a product key that you have not yet registered in your account, click Enter a new product key to continue.

Enter the product key and click >. Follow the on-screen instructions to activate the product.

Incorporating AI-based predictive algorithms is a hot trend in various computing systems and cloud services.

Two often-encountered examples are the recommendation systems deployed in e-commerce and online shopping services, and biometrics-based authentication. Easy “push-button” AI-as-a-service systems have clearly become a trend across business applications. A typical life cycle of an AI system includes collecting business data (often from customers), training classifiers or regression models on that data, and then allowing external users to query the trained model through easy-to-use public interfaces to obtain predictions.

The data instances used to build the AI models often represent sensitive information, such as online purchasing records that reveal customers’ habits and preferences, or customer facial images used in facial recognition systems. Unsurprisingly, there is rising concern that such privacy-sensitive information can be exposed and stolen at some point in the life cycle of an AI system deployment, whether during collection, transfer, or publication of the prediction API.

Indeed, the issue of privacy leaks in AI systems has become a serious concern after recent controversies. This has led tech giants to rethink their AI plans due to privacy concerns. For instance, Google announced in 2017 that they are moving away from their plan to publicly release cloud-stored medical images. Similarly, Amazon provided tools to delete cloud-stored data after a Bloomberg report revealed a data privacy issue, where human audio files from Alexa devices were annotated by contract workers. According to a recent article by the New York Times, Clearview.ai, a facial recognition service provider, downloaded more than three billion photos of people from the internet and social media platforms and used them to build facial recognition models without acknowledgement or permission.

Furthermore, many academic researchers have also reported privacy leakage in AI models, especially deep neural networks, which results from access to (even anonymized) data and prediction APIs by adversarial clients.

In general, building an AI model can be considered a process of signal transformation: the input signals — for example, the product or sum of the collected data instances — are fed into the AI model. These input signals travel through sequences of mathematical operations and help to tune the parameters of the AI model until the model can deliver accurate predictions.

Despite the transformation process, uncovering privacy-sensitive data instances is still possible in a couple of ways.

First, the collected data sets are exposed: they are rarely well protected, and they are usually stored in centralized repositories that are vulnerable to data breaches.

Second, with access to the prediction API, adversaries can conduct reverse engineering to either determine whether a single or several data points exist in the data set used to train the AI model, or reconstruct the statistical profiles of the training data instances. These reverse engineering-based threats are known as membership inference and model inversion attacks.
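To make the membership inference threat concrete, here is a minimal sketch of a loss-threshold attack against a deliberately overfitted model. Everything in it is an illustrative assumption: the synthetic data, the `predict` function standing in for a public prediction API, the `guess_membership` helper, the threshold value, and the premise that the attacker already knows the label of each record being tested.

```python
import numpy as np

rng = np.random.default_rng(0)
n_train, n_out, dim = 20, 20, 50
w_true = rng.normal(size=dim)
X_train = rng.normal(size=(n_train, dim))
X_out = rng.normal(size=(n_out, dim))
y_train = X_train @ w_true + rng.normal(size=n_train)
y_out = X_out @ w_true + rng.normal(size=n_out)

# With more parameters than training points, least squares fits
# (memorizes) the training set almost exactly.
w_hat, *_ = np.linalg.lstsq(X_train, y_train, rcond=None)

def predict(X):
    """Hypothetical stand-in for a public prediction API."""
    return X @ w_hat

def guess_membership(X, y, threshold=1e-6):
    """Flag a record as a training member when the squared error is tiny."""
    return (predict(X) - y) ** 2 < threshold

member_flags = guess_membership(X_train, y_train)    # records used in training
nonmember_flags = guess_membership(X_out, y_out)     # records never seen
print(member_flags.mean(), nonmember_flags.mean())
```

The gap between the two printed rates is what the attacker exploits: the model behaves measurably differently on records it was trained on.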

An even more alarming reality is that, in some cases, removing sensitive attributes from a data set does not guarantee that the adversary can’t recover them. Attackers can use Generative Adversarial Networks (GANs) to generate synthetic data instances that resemble the true (original) data instances used to train the AI models, and infer the redacted details.

To be fair, looking back at the history of the AI revolution, statistics-based AI models, including the fancy Deep Neural Nets, were not designed and developed to be privacy-aware, let alone privacy-preserving. On the contrary, all of them aim to unveil statistical associations between data and attributes and to encode the probabilistic distribution of the data instances into the model. Exploiting statistical knowledge has been the driving force behind the magic of all AI models, and it can also be thought of as the primary cause of their susceptibility to privacy-stealing attacks.

How to design privacy-preserving AI models and systems is a demanding problem in the era of AI. In response, emerging techniques such as Federated Learning and Differentially Private Machine Learning (DPML) have recently been proposed as promising solutions.

Federated Machine Learning (FML) is a decentralized AI model training mechanism. It builds a common machine-learning model, without transferring and sharing data instances from customers’ personal devices (for example, mobile phones, laptops and iPads). During the AI model training process, personal data never leaves the host devices. In each round of the training process, computing the AI model’s parameters is conducted locally and economically at each of the customers’ devices. Only the model parameters computed by each local device are then aggregated in a centralized server and shared back to the local devices to update their local models.
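The training round described above can be sketched in a few lines. This is a minimal illustration of federated averaging on a linear model, not Google's or NortonLifeLock's implementation; the function names `local_update` and `federated_round`, the synthetic devices, and the learning rate are all assumptions made for the example.

```python
import numpy as np

def local_update(weights, X, y, lr=0.1, epochs=5):
    """Run a few gradient-descent steps on one device's private data."""
    w = weights.copy()
    for _ in range(epochs):
        grad = 2.0 * X.T @ (X @ w - y) / len(y)  # gradient of mean squared error
        w -= lr * grad
    return w

def federated_round(global_w, devices):
    """One round: devices train locally, the server averages their parameters."""
    local_ws = [local_update(global_w, X, y) for X, y in devices]
    sizes = np.array([len(y) for _, y in devices], dtype=float)
    # Only parameters are aggregated; the raw (X, y) never reach the server.
    return np.average(local_ws, axis=0, weights=sizes)

# Three simulated devices, each holding its own private data.
rng = np.random.default_rng(0)
true_w = np.array([2.0, -1.0])
devices = []
for n in (30, 50, 20):
    X = rng.normal(size=(n, 2))
    y = X @ true_w + 0.01 * rng.normal(size=n)
    devices.append((X, y))

w = np.zeros(2)
for _ in range(50):
    w = federated_round(w, devices)
print(w)
```

Weighting the average by each device's sample count is one common design choice; an unweighted mean would also fit the description in the text.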

In contrast, classical distributed Machine Learning-as-a-service systems always need to collect (centralize) and store customers’ data in their own databases. Compromising such a database would definitely result in a severe privacy leak incident.

Google has deployed Federated Learning in production for personalization in its Gboard predictive keyboard across “tens of millions” of iOS and Android devices. Google also recently released a Federated Learning module for its TensorFlow framework, which makes it easier to experiment with deep learning and other computations on decentralized data.

NortonLifeLock Labs have also been experimenting with Federated Learning — in order to be a leader in the security, privacy, and identity industry — by applying this cutting-edge technique in our research prototypes.

Differential privacy defines a privacy-preserving information publication mechanism that releases a data set accurately describing the patterns of a group of subjects while withholding fine details about each individual in the group. It is performed by injecting a small amount of noise into the raw data before it is fed into a local machine learning model, so that it becomes difficult for adversaries to reverse or estimate the original profiles of the training data instances. Intuitively, the injected noise disturbs the output of the AI model.

By accessing the blurred and noisy output, the reverse-engineering capability of the adversary is greatly weakened. Indeed, an AI model can be considered differentially private if an observer seeing its output cannot tell if a particular individual’s information was used in the computation. A differentially private training process of AI models then enables nodes to jointly learn a usable model while hiding what data the data owners might hold.
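The indistinguishability idea above has a standard formal statement. The notation here is introduced for illustration and does not appear in the original text: a randomized mechanism $\mathcal{M}$ is $\varepsilon$-differentially private if, for every pair of data sets $D$ and $D'$ that differ in one individual's record, and for every set $S$ of possible outputs,

$$
\Pr[\mathcal{M}(D) \in S] \le e^{\varepsilon} \, \Pr[\mathcal{M}(D') \in S]
$$

A smaller $\varepsilon$ means the two output distributions are closer together, so an observer learns less about whether any one person's data was used.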

The TensorFlow Privacy library operates on the principle of differential privacy. Specifically, it fine-tunes models using a modified stochastic gradient descent that averages together multiple updates induced by training data examples, clips each of these updates, and adds noise to the final average. This prevents the memorization of rare details, and it offers some assurance that two machine learning models will be indistinguishable whether a person’s data is used in their training or not.
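The clip-average-noise step can be sketched in plain NumPy. This is not the TensorFlow Privacy API; the function `dp_average_gradient` and its parameters `clip_norm` and `noise_multiplier` are hypothetical names chosen for this example, and the sketch covers only the gradient aggregation, not privacy accounting.

```python
import numpy as np

def dp_average_gradient(per_example_grads, clip_norm, noise_multiplier, rng):
    """Clip each per-example gradient, sum, add Gaussian noise, then average."""
    grads = np.asarray(per_example_grads, dtype=float)
    norms = np.linalg.norm(grads, axis=1, keepdims=True)
    # Scale down any gradient whose L2 norm exceeds clip_norm.
    scale = np.minimum(1.0, clip_norm / np.maximum(norms, 1e-12))
    clipped = grads * scale
    # Noise is calibrated to the clipping bound, which caps any one example's effect.
    noise = rng.normal(0.0, noise_multiplier * clip_norm, size=grads.shape[1])
    return (clipped.sum(axis=0) + noise) / len(grads)

rng = np.random.default_rng(0)
grads = [[3.0, 4.0], [0.3, 0.4]]  # the first gradient has norm 5 and gets clipped
noisy = dp_average_gradient(grads, clip_norm=1.0, noise_multiplier=1.1, rng=rng)
print(noisy)
```

Clipping bounds how much any single training example can move the model, which is what lets a fixed amount of noise mask that example's presence.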

Besides the two mainstream privacy-preserving learning methods, NortonLifeLock Labs researcher Dr. Saurabh Shintre has recently proposed a right-to-erasure mechanism to defend against privacy-stealing threats in AI systems, such as the membership and attribute inference attacks described above. These types of attacks demonstrate that AI models can act as indirect stores of the personal information used to train them.

Therefore, to give data providers better control over their privacy, NortonLifeLock Labs proposed methods to delete the influence of private data from trained AI models. Apart from privacy reasons, this capability can also be used to improve both the security and usability of AI models. For instance, if poisoned or erroneously crafted data instances are injected into the training data set, this technology can help to unlearn the unexpected effects of the poisoned data.

The core idea of this work is to use a mathematical concept known as influence functions on AI models. These functions can be used as a tool that is capable of measuring the effect of a training point on the AI model’s parameters and its behavior during test time. Specifically, these functions can measure the change in the model's accuracy at a test input when a training point is removed from the training set. In other words, they can serve as control mechanisms for the quality of the AI model created, given a set of training data. With this formulation, influence functions can be used to implement right to erasure in existing models.

When a user requests that their data be removed, the data processor must identify all the machine learning models where the user’s personal data was used for training. Having complete access to the model parameters, the processor can compute the new parameters that result when the user’s data is removed from the training set. These new parameters can be trivially computed by measuring the influence of the user's data and then modifying the parameters accordingly. Influence functions also allow a neutral auditor to confirm that the request to erase data was completed.
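As a rough illustration of the parameter update described above, the sketch below applies a first-order influence-function correction to a ridge regression model and compares the result with retraining from scratch. Ridge regression is a stand-in chosen because its Hessian is easy to write down; the helper names `fit_ridge` and `unlearn_point`, the synthetic data, and the removed index are assumptions, and this is not the NortonLifeLock Labs method itself.

```python
import numpy as np

def fit_ridge(X, y, lam):
    """Minimize sum of 0.5*(x.theta - y)^2 plus 0.5*lam*||theta||^2."""
    d = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(d), X.T @ y)

def unlearn_point(theta, X, y, idx, lam):
    """Approximate the parameters after removing training point idx."""
    x, target = X[idx], y[idx]
    grad = x * (x @ theta - target)                # gradient of the removed point's loss
    hessian = X.T @ X + lam * np.eye(X.shape[1])   # Hessian of the full objective
    # Influence-function step: shift theta by H^{-1} times the removed gradient.
    return theta + np.linalg.solve(hessian, grad)

rng = np.random.default_rng(1)
X = rng.normal(size=(200, 3))
y = X @ np.array([1.0, -2.0, 0.5]) + 0.1 * rng.normal(size=200)
lam = 1e-2

theta = fit_ridge(X, y, lam)
theta_unlearned = unlearn_point(theta, X, y, idx=7, lam=lam)
theta_retrained = fit_ridge(np.delete(X, 7, axis=0), np.delete(y, 7), lam)

err_before = np.linalg.norm(theta - theta_retrained)
err_after = np.linalg.norm(theta_unlearned - theta_retrained)
print(err_before, err_after)
```

An auditor could run the same comparison: if the unlearned parameters sit much closer to the retrained ones than the original parameters did, the erasure request has plausibly been honored.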

Unfortunately, no perfect method has yet been discovered to guarantee a good trade-off between data privacy protection and the utility of AI systems. State-of-the-art privacy-preserving machine learning methods, including Federated Learning, Differentially Private Learning, and learning mechanisms with encrypted data, are still in their infancy.

For example, Federated Learning is vulnerable to injected data noise and unstable computation and communication support. Besides, the data privacy issues persist if the adversary can access the model parameter aggregation module hosted by the central parameter server in Federated Learning.

Differential privacy has been criticized for causing too much utility loss by randomly changing training data and/or learning mechanisms.

Learning with encrypted data is far from being practically useful. Encrypting and decrypting data instances is computationally heavy, which is the major bottleneck preventing its use in large-scale data mining. Though homomorphic encryption provides a sound framework, it is compatible with only a narrow band of mathematical operations. The use of homomorphic data encryption is thus limited to simple linear machine learning models.

Nevertheless, these methods open a new research field aiming to embed privacy protection into the design of machine learning algorithms. Byzantine-Resilient Federated Learning, Renyi Differential Privacy, and privacy discount offer further improvements in training Deep Neural Networks.

Although a perfect solution is still a long way off, exploring these directions will lead us toward better user protection by seamlessly integrating data privacy and AI analytics.

Click Agree and download

If you have more than one Norton product in your account, select the product you want to download and click Next.

Click Load More if the product you want to download does not appear in the list.

To install on another device, click Send a download link. To continue installing on another device, read I want to install my Norton product on another device.

Depending on your browser, do one of the following:

For Internet Explorer or Microsoft Edge browser: Click Run.

For Firefox or Safari: Click the Download option in the upper right corner of the browser to view the downloaded files and double-click the downloaded file.

For Chrome: Double-click the downloaded file at the bottom left.

If the User Account Control window appears, click Continue.

Follow the on-screen instructions.

Your Norton product is now installed and activated.

Norton offers additional features such as ransomware protection and parental controls. Norton Antivirus is available at a monthly price with no commitment and, as you would expect, annual plans carry a bigger discount. Unlike many other antivirus programs, Norton gives you the option to pay as you go. The prices for a single device are already reasonable, so a multi-device plan is far cheaper than the competition. Norton packages offer few backup features but are safe and secure. Norton also offers a free antivirus program for Windows and Android, which can lower your overall cost. For example, you could purchase a single-user plan for your desktop and still protect your mobile device with the free plan.

Once the installation is complete, Norton performs a full scan and sets a baseline for your system. The scan runs in the background and, as far as we know, is faster than a normal scan. Since this is a light test, you can continue to use your machine. Additional scans can be started using the magnifying glass icon at the bottom of the interface. There are three scan modes: full, custom, and what we call “critical areas”.

As you scroll down, you'll see more icons, 23 in total for Premium Security. Each icon represents a different component of your antivirus package, from document shredders to password managers. There are also other utility icons, for example Support and Products. You can access the last two, along with the settings, by clicking the three-line icon at the top left of the interface. This menu is a more familiar antivirus control panel where you can manage your devices, products, and settings.

The first set of tests conducted was the Anti-Malware Testing Standards Organization (AMTSO) check of desktop antivirus feature settings. Norton blocked all files from downloading and also blocked download files from being generated. Overall, Norton's built-in download protection was more effective in these tests. Norton Security is an affordable antivirus program with a feature set that meets all expectations. However, with a strong VPN and password manager offering, this shine remains unfounded. The same applies to the interface, which stumbles when pressure is applied.

Norton is considered one of the safest antivirus programs on the market. In addition to offering near-perfect malware detection scores, Norton Antivirus offers great value for money with a wide range of additional tools. You'll find a built-in password manager, a secure browser, VPN client software, protection against encrypting ransomware, and automatic profiles that optimize Norton's impact on your system depending on whether you're working, watching a movie, or playing a game.

Free Intel Norton Partner Program ecosystem

| Program category | Resell | Resell | Resell | Resell | Resell | Technology |
| --- | --- | --- | --- | --- | --- | --- |
| Partner type | Solution provider | Solution provider | Solution provider | Distribution | Distribution | |
| Partner level | Platinum | Gold | Silver | Broadline | Value added | |
| Managed Services specialization | Yes | Yes | Yes | | | |
| Service Delivery specialization* | Yes | Yes | Yes | Yes | | |
| Support Provider specialization** | Yes | | | | | |
| Partnership advantages | | | | | | |

*Service Delivery specialization will be available in the second half of 2015.

**Formerly the Norton Authorized Support Provider program (MASP)